Note: the network was originally installed with the 3rd Edition of Plan 9; it has since been upgraded to the 4th Edition. See our upgrade page for details.

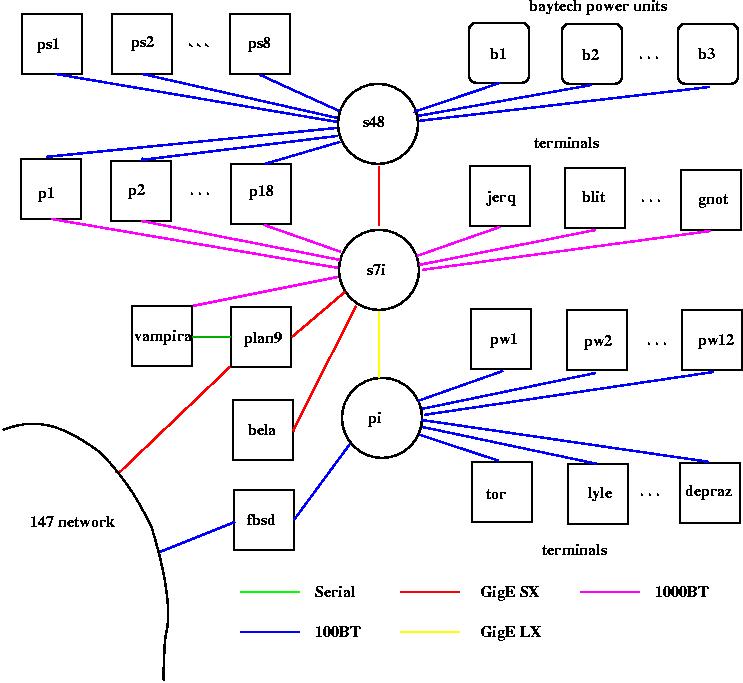

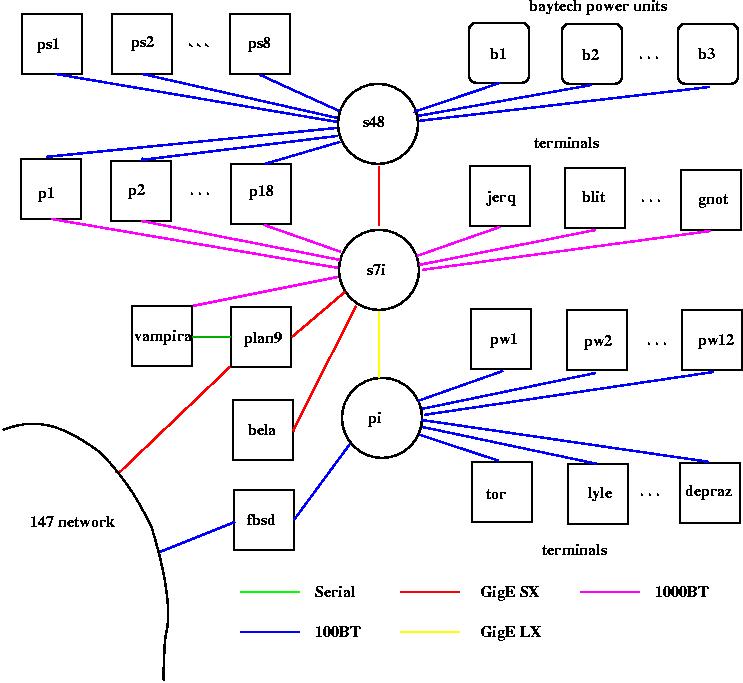

The diagrams above show the layout of the ACL Plan 9 network. The CPU/authentication server acts as the gateway to the outside world and has two Gigabit Ethernet interfaces: one connects to the outside world (the "147" network), and the other connects to a Summit 7i GigE switch on plan9.lanl.net, the internal Plan 9 network. The FreeBSD machine fbsd.acl.lanl.gov also bridges the external and internal networks.

The internal network holds a file server, various terminals, and cpu servers. In the figure, "s7i" is the Summit7i 32-port GigE switch (28 copper ports, 3 SX fiber ports, and 1 LX fiber port); "s48" is a Summit48, a 48-port 100BT switch with an SX GigE uplink; and "pi" is a 20-port packet engine 100BT switch with an LX GigE uplink. Baytech power units are attached to the Compaq DL360 machines (p1-p18, ps1-ps8, and fbsd) to allow remote power management.

1) Set up the standalone cpu/auth server.

2) Set up the fileserver.

3) Do a base install on the secondary cpu servers, the first powerwall machine (the others are clones), and the terminals.

4) Configure the fileserver to support booting the terminals, the secondary cpu servers, and the primary cpu/auth server. Next, boot the primary and secondary cpu servers off the fileserver.

5) Configure the cpu/auth server to set up mail, printing, the time zone, and users.

6) Install software on the fileserver and the standalone terminals.

7) Customize the standalone terminals, secondary cpu servers, cluster, and powerwall machines.

The cpu/auth server is a leftover front end from a Linux cluster we were salvaging. Its hardware configuration is:

Compaq SP700 workstation

2 550Mhz PentiumIII Xeon cpus

2 Gbytes of RAM

8Mb Matrox Millennium II PCI graphics card

Integrated Intel i82557 10/100 ethernet

2 Alteon Acenic SX Gigabit ethernet cards

Integrated Dual Symbios sd53c875 SCSI controllers

9Gb root disk

SGI 1600SW Flatpanel monitor w/ multisync adaptor

This is the only machine that lives on the external network. Its secondary network interface is connected to the external network, and its primary interface is connected to the unrouted internal network. However, all hosts on the internal network can reach the external network by using the command:

import plan9 /net.alt

and then using addresses like:

ping /net.alt/icmp!acl.lanl.gov

ssh /net.alt/tcp!acl.lanl.gov

Actually, if you are willing to wait 30 seconds or so for the timeout, you don't need the /net.alt/ prefix. Alternatively, if you want to use only the external interface in a given namespace, you can import the external interface on top of the internal interface and then use "normal" addresses:

import plan9 /net.alt /net

or, if /net.alt has already been imported:

bind /net.alt /net

Mounting the external interface over /net in this way is the method we commonly use.
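To make the address syntax concrete, here is a minimal Python sketch that splits an address such as /net.alt/tcp!acl.lanl.gov into its network directory, protocol, host, and optional service. It is our own illustration, not Plan 9's dial(2) or connection server code; the names DialAddr and parse_dial are hypothetical, and it assumes the network component is either a bare protocol name (resolved against /net) or a path whose last element is the protocol.

```python
from typing import NamedTuple, Optional


class DialAddr(NamedTuple):
    netdir: str              # network directory, e.g. "/net" or "/net.alt"
    proto: str               # protocol directory name, e.g. "tcp" or "icmp"
    host: str                # host name or address
    service: Optional[str]   # None when omitted, as in the ping example


def parse_dial(addr: str, default_netdir: str = "/net") -> DialAddr:
    """Split a dial string such as '/net.alt/tcp!acl.lanl.gov!ssh'.

    Illustration only (hypothetical helper): it does no name lookup and
    does not treat the special "net" network the way the real
    connection server does.
    """
    parts = addr.split("!")
    if len(parts) not in (2, 3):
        raise ValueError(f"expected network!host[!service], got {addr!r}")
    network, host = parts[0], parts[1]
    service = parts[2] if len(parts) == 3 else None
    if "/" in network:
        # '/net.alt/tcp' -> directory '/net.alt', protocol 'tcp'
        netdir, _, proto = network.rpartition("/")
    else:
        # a bare protocol name resolves against the default network directory
        netdir, proto = default_netdir, network
    if not netdir or not proto or not host:
        raise ValueError(f"malformed dial string: {addr!r}")
    return DialAddr(netdir, proto, host, service)


if __name__ == "__main__":
    for a in ("/net.alt/icmp!acl.lanl.gov", "tcp!acl.lanl.gov!ssh"):
        print(parse_dial(a))
```

The fallback to /net for a bare protocol name mirrors why binding /net.alt onto /net works: once the external interface sits at /net, the "normal" addresses resolve to it without any prefix.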

For details on how we configured the cpu server, see our cpu server setup page.

Our initial plans called for using another leftover Compaq SP700 as the fileserver machine. However, because of problems with that machine, we ended up using different hardware:

Dell 610 workstation

2 500Mhz PentiumIII Xeon cpus

1 Gbyte of RAM

Diamond AGP graphics card

Integrated 3Com 3c905B 10/100 ethernet

1 Alteon Acenic Copper Gigabit ethernet card

Integrated Dual Adaptec SCSI controllers (disabled)

LSI Logic/Symbios SCSI controller

9Gb SCSI disk on id=0

36Gb IBM SCSI disk on id=1

36Gb IBM SCSI disk on id=2

36Gb IBM SCSI disk on id=3

The fileserver machine uses the three 36Gb drives as a pseudo-WORM (write once, read many) store.

See our fileserver setup page for details on how we configured the fileserver.

Our current terminals are:

1 Thinkpad T23: Jerq

1 Thinkpad T21: Blit

2 Thinkpad 600Es: Monkeyboy and u1

4 Thinkpad 570s: Depraz, Gnot, Tor, and Lyle

We have also used Thinkpad 760s, 760Es, 560s, and 560Es as terminals in the past, but since they are slow and have only two mouse buttons, they have been added to the scrap heap.

What is the deal with the strange names?

See our terminal setup page for details on how we configured the terminals.

Our main secondary cpu server, bela, is configured as follows:

Compaq SP700 workstation

2 550Mhz PentiumIII Xeon cpus

2 Gbytes of RAM

32Mb Nvidia GeForce 2 AGP graphics card

8Mb Matrox Millennium II PCI graphics card (no longer used)

Integrated Intel i82557 10/100 ethernet

Alteon Acenic SX Gigabit ethernet card

Integrated Dual Symbios sd53c875 SCSI controllers

9Gb root disk

Soundblaster 16 ISA soundcard

TDK CD-ROM writer

SGI 1600SW Flatpanel monitor w/ multisync adaptor

WinTV TV tuner card

We use this machine for running mail clients and other things we don't want to run on the gateway machine. It also serves as a general-purpose cpu server.

The other secondary cpu server, "sound", is a Compaq AP200 workstation:

440 Mhz PII cpu

384 Mbytes of RAM

4Mb Matrox Millennium II PCI graphics card

Intel i82557 10/100 ethernet

Soundblaster 16 ISA soundcard

An older secondary cpu server was a DEC Alpha PC164 clone; it has since been scrapped. Its configuration was:

DEC Alpha EV5 @ 466Mhz

512Mb of RAM

1 DEC DE500 100BT network card

1 Kingston Tulip 100BT network card

See our secondary cpu server notes for details on how we configured these machines.

We have a dozen IBM Thinkpad 760s that are set up as cpu servers in a powerwall configuration. These machines are used as a testbed for developing message-passing software.

See our powerwall setup page for details.

We have a rack of 26 Compaq DL360s that are used as a testbed for developing message-passing software. Each node has the following configuration:

800Mhz PIII

512Mb of RAM

2 onboard EEpro100B ethernet interfaces

18 of the nodes (p1-p18) currently have Alteon Copper GigE ethernet interfaces. The other 8 nodes (ps1-ps8) use the onboard 100BT ethernet.

See our cluster setup page for some details.

If you did not log in with your network password on a remote terminal, you can give the terminal your password with the auth/iam command so that you can log in to the network. (If you don't enter your password, you can still log in using the netkey authentication protocol rather than the normal Plan 9 protocol.) To import your home directory:

import -C plan9.acl.lanl.gov /usr

(-C makes import use the cfs cache file system.) To get access to machines behind the gateway:

import plan9.acl.lanl.gov /net

One of the things we want access to is mail. There are problems with running mail on the gateway, so we use the secondary cpu server bela instead. For example, to run the faces command we use the function:

fn rfaces { import plan9.acl.lanl.gov /net; cpu -h bela -c faces -i }

To run acmemail we use the function:

fn racmemail { import plan9.acl.lanl.gov /net; cpu -h bela -c acmemail }

which calls the function:

fn acmemail { import plan9 /net.alt; acme -f $font -l acmemail.dump }

That is, we import the internal interface of plan9 onto the remote terminal, use cpu to log in to the machine bela, and, once on bela, import the external interface of plan9 so that we can send mail to the outside world.